TikTok is punishing kindness while letting hatred roam free with its broken moderation

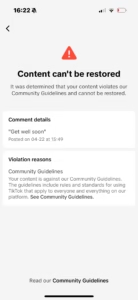

If you’ve been hit with a “Content Can’t Be Restored” warning for saying something as harmless as “Get well soon,” you’re not alone.

Thousands of users are waking up to a moderation system that’s not just broken. It’s rigged.

It rewards rage, suppresses empathy, and punishes you for playing by the rules.

Let’s break down how we got here.

And what you need to know to survive it.

TikTok’s Moderation Logic Is Backwards

The algorithm isn’t looking for meaning.

It’s scanning words.

Context doesn’t matter.

Intent doesn’t matter.

You could be offering genuine support to someone — and still get flagged.

Meanwhile, users spewing slurs, threats, and bile often get a free pass.

When your moderation system can’t tell the difference between “hope you feel better” and “die slow,” you’ve got a catastrophe on your hands.

And TikTok has decided to call it “community protection.”

Welcome to the future of broken platforms.
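To see how word scanning without context can go wrong, here is a toy sketch in Python. It is not TikTok's actual system, which isn't public. The blocklist, the `naive_flag` function, and both sample comments are made up for illustration. It only shows the general failure mode of matching words while ignoring meaning.

```python
import re

# Hypothetical blocklist, invented for this illustration only.
BLOCKLIST = {"sick", "die", "hurt"}


def naive_flag(comment: str) -> bool:
    """Flag a comment if any word exactly matches a blocklisted word.

    No context, no intent: just a word lookup.
    """
    tokens = re.findall(r"[a-z0-9']+", comment.lower())
    return any(token in BLOCKLIST for token in tokens)


supportive = "Sorry you're sick, get well soon"
hostile = "d1e slow"  # a trivial misspelling dodges the exact-match lookup

print(naive_flag(supportive))  # True: the kind comment is flagged
print(naive_flag(hostile))     # False: the hostile one slips through
```

A filter like this flags the supportive comment because it contains "sick" and misses the hostile one because "d1e" isn't on the list. Real moderation systems are far more complex, but any approach that leans on surface wording over intent can fail in the same two directions.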

Why Good Behavior Gets Punished

TikTok’s moderation isn’t just clumsy.

It’s strategically clumsy.

Flagging benign comments like “get well soon” allows TikTok to:

- Claim they’re proactively moderating “problematic language.”

- Inflate their takedown numbers to impress regulators.

- Dodge accusations of not taking community safety seriously.

Meanwhile, actual hate speech often stays up because it drives more engagement, more controversy, and more time spent on the app.

TikTok doesn’t want healthy communities.

They want endless cycles of outrage they can monetize.

Appeals Don’t Work (And They’re Not Meant To)

When you appeal a wrongful takedown on TikTok, you’re not triggering a real human review.

You’re entering a digital echo chamber.

Your comment is analyzed by the same broken AI that flagged it in the first place.

This system isn’t built for justice.

It’s built for efficiency.

A fast, automated denial saves TikTok time and money.

And it buries your complaint under a mountain of algorithmic noise.

Why Hate Speech Often Gets a Free Pass

Hate content, however vile, sparks emotional reactions.

Comments.

Shares.

Outrage.

More time on the platform.

More eyes on ads.

More revenue for TikTok.

It’s cold math.

The more they “fail” to stop hate, the more they profit.

Deleting empathy is just a side effect.

The Double Standard Is Obvious

You can get banned for calling someone “strong” after a breakup post.

You can stay active after mocking someone’s ethnicity.

You can lose your account for defending someone.

You can thrive while calling for violence.

These aren’t isolated incidents.

They are the pattern.

They expose what TikTok values.

Why TikTok Prefers Automated Chaos

Manual moderation would be slow.

Expensive.

And it would demand real accountability.

AI is faster.

Cheaper.

Easier to blame when it screws up.

An AI that punishes good users while boosting hateful engagement is ideal for a platform that only cares about numbers.

If real people reviewed reports, half the top comment sections would vanish overnight.

TikTok won’t risk that.

Real Stories of Insane Moderation

- One user reports a violent death threat: “No violation found.”

- Another wishes someone a “speedy recovery”: account warning issued.

- Gore livestreams slip through moderation for hours.

- Jokes among friends flagged as “harassment” within minutes.

The system is irrational on purpose.

It keeps you anxious.

It keeps you guessing.

And it keeps you hooked.

The Bigger Risk Nobody Talks About

Moderation errors aren’t just personal frustrations.

They reshape online culture.

They teach users that:

- Kindness is dangerous.

- Silence is safer.

- Outrage gets rewarded.

Over time, this warps how people interact everywhere online.

It’s not just TikTok users who suffer.

It’s the entire digital generation growing up under these twisted incentives.

How Users Are Fighting Back

Some are quitting TikTok.

Many are diversifying.

Smart users are:

- Backing up their accounts and content.

- Building email lists with Systeme so TikTok can’t silence them overnight.

- Using external schedulers like Blaze AI to automate posts while avoiding burnout.

- Moving serious discussions to Discord servers, private communities, and off-platform spaces.

The strongest users aren’t leaving.

They’re decentralizing.

They’re preparing for the day TikTok cracks down even harder.

And they’ll survive it.

What TikTok Won’t Admit Publicly

They won’t tell you that empathy is dangerous on their platform.

They won’t explain why reporting gore fails but joking about “eating all the snacks” gets flagged.

They won’t acknowledge that their rules are selectively enforced to maximize profit, not community safety.

Every “oops” is profitable.

Every “error” earns them another million minutes of engagement.

TikTok won’t stop.

Not unless it hurts their bottom line.

And by then, it might be too late.

What You Can Do About It

Protect your voice.

Protect your work.

Protect your future.

Take control by:

- Saving important comments and posts to external drives.

- Building communities on platforms you own.

- Using proxies like Social Proxy to reduce the risk of IP-based profiling and shadowbans.

- Scheduling outside of TikTok so your content strategy isn’t ruined when the app misfires.

- Speaking carefully but without losing your authenticity.

Every smart move you make today reduces the leverage TikTok holds over you tomorrow.

Why This Matters More Than You Think

TikTok isn’t just deleting random comments.

They’re reshaping human behavior.

They’re training people to fear kindness.

They’re rewarding division, anger, and conflict.

They’re telling you, through algorithms and takedowns, that empathy is “suspicious” but mockery is “safe.”

If that doesn’t terrify you, it should.

Because once platforms succeed in killing empathy online, the effects don’t stay virtual.

They bleed into real life.

Into how we talk.

How we treat each other.

How we see strangers.

This isn’t just about losing your account.

It’s about losing your humanity one flagged comment at a time.

Final Word

Saying “get well soon” shouldn’t get you punished.

But on TikTok, it does.

Because TikTok doesn’t want thoughtful users.

It wants reactive consumers.

You can’t fix their broken priorities.

But you can build around them.

You can stay smarter, sharper, and more independent.

You can refuse to let their algorithms rewrite your humanity.

And when they crack down harder — because they will — you won’t be caught off guard.

You’ll already be free.

Because you planned for the collapse.

And you chose resilience over rage.
