TikTok used to be where people escaped the chaos. Now it’s the chaos. Open the app and you’re hit with a flood of toxic comments, divisive content, and casual racism dressed up as “engagement.” It doesn’t matter what side you’re on.

A video of a smiling baby gets turned into a warzone. A wedding clip becomes a breeding ground for “don’t mix” jokes. And when users try to clean it up (report it, block it, hit “not interested”), the algorithm just doubles down. It’s not just the content. It’s the design. Ragebait works. But is TikTok racist?

TL;DR:

- TikTok users report a rise in racist, ragebait content since late 2022, and it’s getting worse in 2025.
- The algorithm seems to favor polarizing videos and hateful comment threads for engagement.
- Reporting racist content often leads to “no violations found,” while harmless comments get removed.
- Many believe the system is either broken or intentionally ignoring hate for clicks.
- Some users are quitting entirely, calling TikTok a “burning trash fire” of algorithmic division.

1. When Your FYP Turns Into a Racist Hellscape

You’re just trying to vibe.

Maybe watch a haircut tutorial. A funny skit. A dog falling into a pool.

But instead, TikTok serves you back-to-back videos with subtle — or sometimes blatant — racism baked in. And the worst part? It knows what it’s doing. These aren’t just random viral clips. The platform pushes what gets comments, even if those comments are vile. Because the algorithm doesn’t care why people are engaging. It only cares that they are.

Creators in the thread pointed out that even sweet, wholesome videos (a Black toddler smiling, an interracial wedding, someone promoting Black art) immediately become bait for racist trolls. The same recycled comments appear every time: “13%,” “mud blood,” “single mother moment.” You scroll. You see it again. And again. Until it feels like the app is training you to hate people. Or at the very least, get used to others hating them.

This isn’t a new problem either. Some users say it started ramping up in late 2022. Others blame the post-Trump TikTok user wave. But one thing is clear — the platform has built an ecosystem where racist engagement is rewarded and empathy gets you flagged.

TikTok doesn’t need to be explicitly racist. It just has to keep showing you enough hateful garbage to get a reaction. And based on the thread? It’s working.

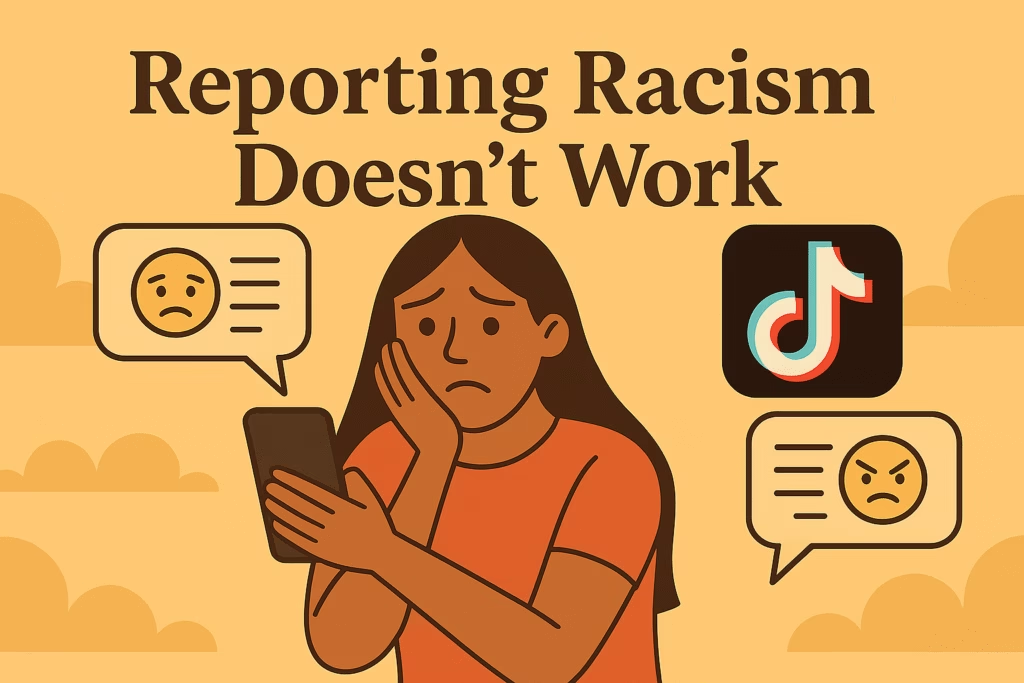

2. Reporting Racism Doesn’t Work — And Sometimes Backfires

TikTok tells you to report hate. But when you do? Nothing happens.

Multiple users in the thread said they’ve reported overtly racist comments — slurs, dehumanizing jokes, flat-out hate speech — only to be told “no violation found.” Meanwhile, people who respond with something mild like “That’s dumb” or try to educate the trolls get their comments removed. Or worse: shadowbanned.

It’s not just inconsistent. It’s demoralizing.

And it signals one thing loud and clear: TikTok doesn’t care who’s getting attacked — as long as the comment section keeps moving.

One user said they got flagged for holding a marker in their mouth while hanging a photo. Another got banned just for responding logically to racist bait. Others were flagged for “bullying” because they called out white supremacy. It’s like the algorithm has a twisted sense of morality: racism is fine, but challenging racism is too spicy for the platform’s delicate little circuits.

And let’s be real — this doesn’t feel like a bug. It feels like a feature.

Keeping hateful content up brings more replies, more outrage, more scrolling. It’s the algorithm’s favorite drug: chaos. TikTok is built for engagement, not ethics. And that’s a dangerous foundation when your content moderation system is run by bots and indifference.

3. How TikTok Uses Ragebait to Boost Engagement

Let’s call it what it is: ragebait is the new retention strategy.

TikTok doesn’t just allow controversial content. It promotes it. The system is designed to serve you whatever makes you feel something — even if that “something” is pure disgust. You might not like a video, but if you watch it twice, leave a comment, or share it out of frustration? That’s gold to the algorithm.

And guess what kind of content almost always triggers that response? Racist garbage.

Users in the thread shared that even after resetting their accounts, creating new ones, avoiding political content, and only engaging with chill videos… the racism still crept back in. Not just in content — but in the comments. It’s as if TikTok is testing you: How long before you bite? Before you reply? Before you get angry?

One user put it bluntly: “Every time I see a cute video with a Black baby, I know the comments will ruin it.” Another said their FYP started fine — then spiraled into hateful videos after watching just one racially charged clip. The message is clear: TikTok is always one swipe away from feeding you rage.

This isn’t accidental. It’s engineered.

By making hate visible and responses inevitable, TikTok creates a cycle where outrage becomes the fuel. The algorithm doesn’t distinguish between love and hate — it just wants engagement. That’s why “don’t mix” trends are allowed to go viral. That’s why racist memes get millions of views. That’s why creators talking about real issues get silenced while trolls keep their platforms.

It’s rage as a business model. And TikTok’s thriving on it.
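To make the “doesn’t distinguish between love and hate” point concrete, here’s a toy sketch in Python. To be clear: TikTok’s real ranking system isn’t public, so everything here is an assumption for illustration. The `Video` fields, the `WEIGHTS` values, and the `engagement_score` function are all hypothetical. The only thing it shows is how a ranker that counts interactions and ignores their tone would behave.

```python
from dataclasses import dataclass

# Hypothetical weights, picked only for illustration. Not TikTok's real values.
WEIGHTS = {"likes": 1.0, "rewatches": 2.0, "comments": 3.0, "shares": 4.0}


@dataclass
class Video:
    title: str
    likes: int
    rewatches: int
    comments: int
    shares: int
    angry_comment_ratio: float  # recorded, but never read by the scorer


def engagement_score(video: Video) -> float:
    """Add up weighted interaction counts. Tone of the interactions is ignored."""
    return (
        WEIGHTS["likes"] * video.likes
        + WEIGHTS["rewatches"] * video.rewatches
        + WEIGHTS["comments"] * video.comments
        + WEIGHTS["shares"] * video.shares
    )


def rank(videos: list[Video]) -> list[Video]:
    """Order videos from highest to lowest engagement score."""
    return sorted(videos, key=engagement_score, reverse=True)


# Made-up numbers: a well-liked wholesome clip vs. a clip people argue under.
feed = [
    Video("dog falls into pool", likes=900, rewatches=100, comments=40,
          shares=30, angry_comment_ratio=0.02),
    Video("ragebait clip", likes=150, rewatches=300, comments=500,
          shares=120, angry_comment_ratio=0.85),
]

for video in rank(feed):
    print(video.title, engagement_score(video))
```

In this made-up example the ragebait clip has far fewer likes but still lands on top, because the angry comments and frustrated shares count exactly the same as happy ones. A ranker would need to actually read something like `angry_comment_ratio` to tell the difference.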

4. Users Are Quitting — And Not Just Quietly

For some, the racism was just the last straw.

Plenty of creators in the thread didn’t just complain — they left. Deleted their accounts. Nuked their FYPs. Moved to YouTube Shorts or just logged off entirely. Because no amount of “not interested” taps can fix an algorithm that thrives on conflict.

And when people leave, they don’t go quietly.

They go on Reddit. They rant in comment sections. They warn others: “It’s not worth it anymore.” Not because of low views or bad content — but because TikTok has become emotionally exhausting. It’s supposed to be fun. Instead, it’s a daily feed of hate, stupidity, and chaos disguised as entertainment.

One user said they couldn’t even enjoy a grilled cheese post without being flagged for “suicidal content.” Another said they were banned without ever posting, just for existing. The platform’s moderation is broken, backwards, or straight-up trolling at this point.

It’s not just creators rage-quitting, either. Regular users are waking up too. The toxic cycles, the endless performative outrage, the double standards — it adds up. TikTok isn’t just a content app anymore. It’s a mood manipulator. And a lot of people are done letting it mess with their head.

You can only be fed garbage for so long before you stop coming to the table.

5. Is This Just TikTok — or a Mirror of the Internet?

You could argue this isn’t just TikTok. That every platform eventually gets infected.

Reddit, Twitter, Facebook — they’ve all gone through waves of toxicity. But the difference with TikTok? It’s faster, more subtle, and more emotionally wired. TikTok doesn’t give you threads. It gives you a dopamine drip. One swipe. One comment. One trigger. Then it fine-tunes what makes you stay.

That’s where things get dangerous.

Because most users don’t realize they’re being manipulated until it’s too late. The algorithm watches how long you linger on a post, not whether you liked it. So if you’re staring at a racist video because you’re stunned, confused, or pissed? TikTok thinks you’re interested. And now it’s part of your feed. Forever.
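Here’s a rough Python sketch of what “watch time counts, likes don’t” could look like. It’s a guess at the general idea, not TikTok’s actual code, which isn’t public. The `update_interest` function, the topic labels, and the scoring rule are all hypothetical.

```python
from collections import defaultdict


def update_interest(profile, topic, dwell_seconds, video_seconds, liked=False):
    """Raise a topic's weight by the fraction of the video that was watched.

    `liked` is accepted but deliberately ignored, to mirror the claim that
    lingering matters and approval does not (an assumption, not a known fact).
    """
    watched_fraction = min(dwell_seconds / video_seconds, 1.0)
    profile[topic] += watched_fraction
    return profile


# Hypothetical viewing session.
profile = defaultdict(float)

# You liked a cooking clip but swiped away after 3 of its 30 seconds.
update_interest(profile, "cooking", dwell_seconds=3, video_seconds=30, liked=True)

# You stared at a ragebait clip for its full 30 seconds, stunned, with no like.
update_interest(profile, "ragebait", dwell_seconds=30, video_seconds=30, liked=False)

top_topic = max(profile, key=profile.get)
print(top_topic)
```

Under these assumptions, the clip you liked barely moves your profile, while the one you stared at in disbelief becomes your strongest “interest.” That’s the trap: the system can’t tell stunned from entertained.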

Other platforms at least make their chaos visible. You can see the battleground on Twitter. On TikTok, it’s hidden behind cute thumbnails and upbeat audio.

But maybe that’s the internet now. Ragebait gets clicks. Division gets comments. Outrage builds brand.

Still, there’s a difference between reflecting the internet and amplifying its worst instincts. TikTok isn’t just letting the garbage in — it’s turning the volume up. And that’s not just irresponsible. It’s calculated.

So no — this isn’t just TikTok being a mirror. It’s TikTok being a megaphone.

6. What Can You Actually Do About It?

Let’s be real. You can’t “fix” TikTok.

Not from the outside. Not as one user. You’re not going to outsmart an algorithm designed by teams of engineers and trained on trillions of interactions. But that doesn’t mean you’re powerless.

Here’s what actually works:

- Stop engaging. Even hate-watching is engagement. That comment you leave to call someone out? That’s fuel. You’re feeding the machine, not beating it.
- Block liberally. Don’t argue. Don’t educate. Block. Block the creator, block the commenters, block entire trends if you have to.
- Use “Not Interested” like a weapon. Spam it. Every time something smells like ragebait, hit it. Even if it feels useless, repeated signals eventually steer the algo.
- Reset your feed. Start over with a fresh account or clear your preferences. But know this: if you’re not intentional about what you engage with, the trash will crawl back.
- Speak up somewhere else. TikTok doesn’t listen to reports, but Reddit does. Articles like this one do. If you’ve got receipts, stories, patterns, share them. You’re not crazy. You’re not alone.

And if it all becomes too much? Log out. Delete the app. Reclaim your brain.

TikTok wants your attention, even if it has to poison your mood to get it. Don’t give it that power.

7. But Is TikTok Doing This on Purpose?

That’s the question no one wants to say out loud — but everyone’s thinking it.

Is this just a side effect of bad content moderation? Or is TikTok intentionally feeding you hate to keep you addicted?

Let’s look at the patterns.

The content that gets removed? Educational replies. Sarcastic callouts. Comments telling trolls to shut up.

The content that stays? Slurs, dehumanizing memes, and ragebait videos so inflammatory they could’ve been cooked in a troll farm. Add to that the fact that TikTok knows what you’re watching, rewatching, skipping, or silently reacting to.

This isn’t some blind AI blundering through the internet. It’s one of the most sophisticated behavioral tracking systems ever built — and it just happens to keep pushing the most toxic, polarizing content?

Come on.

Users in the thread noticed the pattern. Engage once — even accidentally — and the feed warps around it. You can make a new account. Clear your history. Avoid political content. Doesn’t matter. The algorithm still finds its way back to the worst stuff.

So maybe it’s not a bug. Maybe it’s a strategy.

Hate gets comments. Comments drive reach. Reach builds retention. Retention feeds ad dollars. And unless public pressure mounts — unless enough people call it out — TikTok has zero incentive to change.

It’s not broken. It’s profitable.

8. If You Stay on TikTok, Stay Smart

You don’t have to delete TikTok. But you do need to stop using it passively.

Because if you’re not choosing what to watch, the app is choosing for you. And spoiler: it’s not choosing what’s good for your brain.

The algorithm isn’t your friend. It’s not here to entertain you, inform you, or help you grow. It’s here to trap you in loops of engagement — and it doesn’t care if those loops are made of outrage, racism, or depression. If it keeps you scrolling, it’s working.

So if you’re staying? Be ruthless.

Curate your feed like your mental health depends on it — because it kinda does.

Block content that drags you down. Avoid comment sections like they’re radioactive. Use your For You Page as a tool, not a mirror.

And know when it’s time to walk away.

There are still pockets of good content on TikTok. Creators doing real work. Accounts sharing joy, humor, creativity. But they’re buried under a system that rewards hate and chaos. If you’re not actively fighting back — by how you scroll, what you engage with, and when you log off — then you’re letting the machine think you want more.

And trust me — you don’t want more of what TikTok’s serving lately.